Last spring, then-Engineering junior Stefan Zhelyazkov was walking through Hill Field when a curious thought suddenly came upon him.

He thought to himself, “I wish I could take a picture and understand the beauty of this picture, somehow figure it out.”

This semester, Zhelyazkov and two other computer science seniors in the School of Engineering and Applied Science have made that fantasy into a reality — an app that uses algorithms to “transform” a picture into music.

The team, which also includes Eric O’Brien and David McDowell, began research for the project last year.

O’Brien, whom Zhelyazkov recruited not long after pitching his idea to his senior design class, matched Zhelyazkov’s enthusiasm. “The idea of converting a picture into some type of music and saying … ‘that picture sounds like that’ was a really awesome goal for us to have,” he said.

According to computer science professor Insup Lee, the senior projects instructor in the major, students are expected to synthesize what they have learned in all their Engineering classes.

However, senior design projects also draw inspiration from the team members’ outside interests.

O’Brien’s musical knowledge, for example — he sings in the Penn Glee Club and performs with Penn Pipers — was especially useful to a project combining music theory and computer science.

Related:

4/09/13: Computer Science department addresses overcrowding

4/02/13: 2005 College graduate switches paths to create Venmo

2/13/13: Penn student hackers finish apps

Research that marries music and science, though, has a long history. The team found that many thinkers, including Aristotle and Isaac Newton, have investigated the emotional connection between music and color.

“There are these scientists from the scientific revolution or ancient ages who tried to figure out the harmony in the world by relating things that evoke emotions — like colors, like music,” Zhelyazkov said.

One of the strongest intellectual currents in the project was the concept of harmony, which dates back to ancient Greece.

“[The Greeks] thought you could hear the music of the universe. If you lay down in [a] field at night, you could hear the stars,” Zhelyazkov added. “Of course, some of these things are not scientifically accurate, but the way they thought about it philosophically was interesting.”

After comparing earlier scientists’ work on which colors evoke specific emotions, the team reached a conclusion of its own.

“Colors that are brighter, in the spectrum of red or yellow, [are] more warm and evoke certain positive emotions while colors that are darker evoke negative emotions,” Zhelyazkov said.

From this research, the team came up with a multi-layered approach to analyzing a picture and creating a song to match.

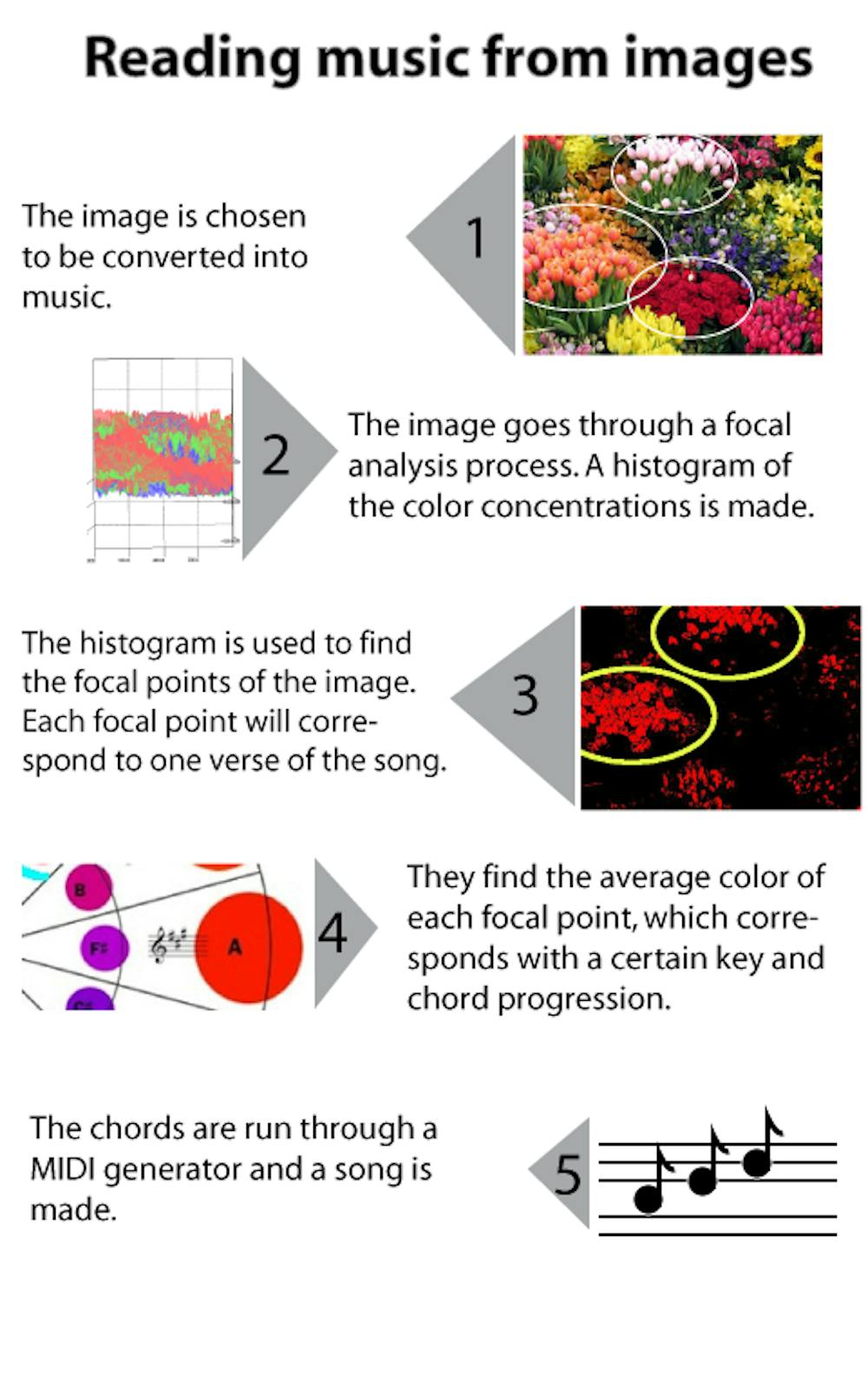

Many scientists doing similar work analyze images sequentially, looking at every pixel in an image when trying to convert it into music. The design team, on the other hand, decided to use what they termed “focal analysis,” analyzing the objects that attract the viewer’s attention in a given image.

This meant creating a three-dimensional histogram for the given picture — such as a bed of flowers — that shows the concentrations of red, blue and green light in the image.

“Every color can be broken down into how much red, how much green, [and] how much blue,” O’Brien said. “You map it out like a little graph.”
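As a rough illustration of the histogram O’Brien describes, the sketch below counts how many pixels fall into each cell of a red-green-blue grid. The function name `rgb_histogram`, the choice of four bins per channel and the sample pixels are assumptions made for this example, not details from the team’s app.

```python
from collections import Counter


def rgb_histogram(pixels, bins_per_channel=4):
    """Count pixels in a 3-D grid indexed by (red bin, green bin, blue bin).

    `pixels` is an iterable of (r, g, b) tuples with values from 0 to 255.
    The bin count is an illustrative assumption, not the team's setting.
    """
    histogram = Counter()
    for r, g, b in pixels:
        # Scale each 0-255 channel value down to a bin index.
        cell = tuple(value * bins_per_channel // 256 for value in (r, g, b))
        histogram[cell] += 1
    return histogram


# Hypothetical sample: two reddish pixels, one green, one blue.
sample_pixels = [(250, 10, 10), (240, 20, 5), (10, 200, 30), (0, 0, 255)]
histogram = rgb_histogram(sample_pixels)
print(histogram.most_common(1))
```

Cells with high counts mark the colors that dominate the picture, which is the “little graph” a later step could search for standout regions.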

They then used the histogram to find the “focal points” of the image. Since the eye is drawn to areas in the image with the most contrast, these areas generally constitute the focal points.

“Contrast means that you have a section that is very different from what is around it. That is at the heart of our focal analysis,” Zhelyazkov said. “We try to find those objects of highly contrasting values. And then, through those red, green and blue values, we identified them properly.”
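The article does not spell out how the team computes contrast, so the following is only a simplified stand-in: it works on a small grid of brightness values rather than on the color histogram, and it scores each cell by how far it differs from the average of its neighbors. The names `local_contrast` and `most_contrasting_cell` and the sample grid are hypothetical.

```python
def local_contrast(gray, row, col):
    """Absolute difference between one cell and the mean of its neighbors."""
    neighbors = [
        gray[r][c]
        for r in range(max(0, row - 1), min(len(gray), row + 2))
        for c in range(max(0, col - 1), min(len(gray[0]), col + 2))
        if (r, c) != (row, col)
    ]
    return abs(gray[row][col] - sum(neighbors) / len(neighbors))


def most_contrasting_cell(gray):
    """Return the (row, col) of the cell that differs most from its surroundings.

    Assumes the grid holds at least two cells, so every cell has a neighbor.
    """
    cells = [(r, c) for r in range(len(gray)) for c in range(len(gray[0]))]
    return max(cells, key=lambda cell: local_contrast(gray, *cell))


# Hypothetical brightness grid: one bright spot on a dark background.
gray = [
    [10, 10, 10, 10],
    [10, 10, 240, 10],
    [10, 10, 10, 10],
]
focal_point = most_contrasting_cell(gray)
print(focal_point)
```

A real image would likely need whole regions rather than single cells, but the idea is the same: the eye goes first to whatever differs most from its surroundings.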

Based on several research papers, the team was then able to map colors to the circle of fifths — a visual representation of the twelve key signatures in music theory.
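One way to picture such a mapping, sketched here under stated assumptions, is to lay the color wheel over the circle of fifths so that each 30-degree slice of hue lands on one key. The article does not say which color the team assigns to which key; placing red on C is an arbitrary choice for this example, and `key_for_color` is a hypothetical helper.

```python
import colorsys

# The twelve keys in circle-of-fifths order, starting from C.
CIRCLE_OF_FIFTHS = ["C", "G", "D", "A", "E", "B", "F#", "Db", "Ab", "Eb", "Bb", "F"]


def key_for_color(r, g, b):
    """Map an RGB color (0-255 per channel) to a key by its hue.

    Assumption for illustration: hue 0 (red) sits on C, and the hue wheel
    wraps once around the circle of fifths.
    """
    hue, _saturation, _value = colorsys.rgb_to_hsv(r / 255, g / 255, b / 255)
    position = round(hue * 12) % 12
    return CIRCLE_OF_FIFTHS[position]


print(key_for_color(255, 0, 0), key_for_color(0, 255, 0), key_for_color(0, 0, 255))
```

Gray pixels have no meaningful hue (`colorsys` reports 0 for them), so a fuller version would need a separate rule for colorless regions.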

To capture the “mood” of an image, the team chose 18 standard chord progressions from classical and pop music.

According to earlier studies, each of these progressions creates music in a certain mood.

Zhelyazkov and his team analyzed the focal points in a particular image and calculated each one’s average color.

The average color gave them both the starting key, according to the circle of fifths color wheel, and the mood, which indicates the appropriate chord progression. Each focal point’s resulting music gives one verse of the song.
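A minimal sketch of that step might average the pixels in a focal point and then pick a mood from the result. The team’s 18 progressions and its exact mood rules are not given in the article, so the brightness threshold and the two progressions below are stand-ins chosen only to make the example run.

```python
def average_color(pixels):
    """Mean (r, g, b) of a non-empty list of (r, g, b) tuples."""
    count = len(pixels)
    return tuple(sum(pixel[channel] for pixel in pixels) / count for channel in range(3))


# Hypothetical stand-ins for the team's 18 progressions.
PROGRESSIONS = {
    "positive": ["I", "V", "vi", "IV"],
    "negative": ["i", "VI", "III", "VII"],
}


def mood_for_color(color, threshold=128):
    """Brighter averages read as positive, darker ones as negative.

    The threshold is an assumption; the article only says brighter, warmer
    colors evoke positive emotions and darker colors negative ones.
    """
    brightness = sum(color) / 3
    return "positive" if brightness >= threshold else "negative"


# Hypothetical focal points: a bright yellow patch and a dark blue one.
sunny = average_color([(250, 220, 40), (230, 200, 60)])
shadow = average_color([(20, 30, 60), (40, 10, 20)])
print(mood_for_color(sunny), PROGRESSIONS[mood_for_color(sunny)])
print(mood_for_color(shadow), PROGRESSIONS[mood_for_color(shadow)])
```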

The team also generated transitional progressions in the same mood to connect the verses. These progressions serve as choruses.
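The resulting structure can be sketched as a simple interleaving: one verse per focal point, with a transitional chorus between consecutive verses. The function `assemble_song` and the placeholder section names are hypothetical, and reusing a single chorus throughout is a simplification.

```python
def assemble_song(verses, chorus):
    """Place the chorus between consecutive verses, never after the last one."""
    song = []
    for index, verse in enumerate(verses):
        song.append(("verse", verse))
        if index < len(verses) - 1:
            song.append(("chorus", chorus))
    return song


# Hypothetical verses, one per focal point, linked by one transitional chorus.
song = assemble_song(["verse in C", "verse in E", "verse in Ab"], "transition")
print([section for section, _music in song])
```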

Zhelyazkov said this formula for creating verses and choruses is inspired by the way a person looks at objects in an image.

“You see one object and then your eyes move away and they spot the second object,” he said.

While the team continues to perfect its app, eventually users will be able to “take pictures and then listen to the things you see,” Zhelyazkov added.
